The insurance industry is dependent on big data – it is the grease that keeps every component of the machine running. No matter what technological innovations or distribution channels an insurance company has at its disposal, good data and the insights it provides will always be among its most valuable assets.

Big data in insurance is defined as the structured and/or unstructured data used to influence underwriting, rating, pricing, forms, marketing, and claims handling. While insurers have had access to this data previously, it is more actionable than ever thanks to advancements in data analytics, machine learning, and IoT.

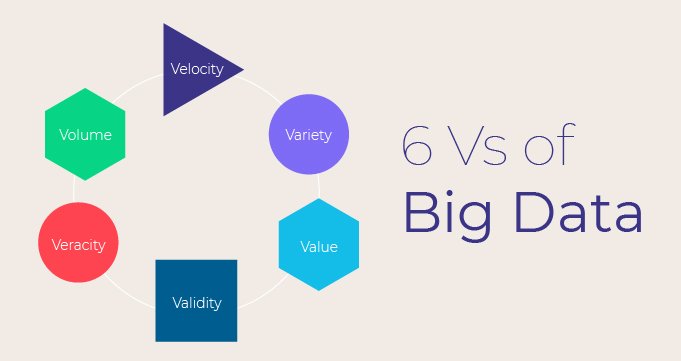

Due to the sheer volume of data available – hence the name big data – the insurance industry has devised a six-factor system to determine the quality of the data they’re analyzing. The 6 Vs of big data provide a helpful framework for insurers to more easily transform their raw data into actionable information.

The 6 Vs of Big Data

1) Volume

The term “big data” is ambiguous, as different insurers have different data storage and analytics capabilities. What might be overwhelming to a smaller regional insurer is just a drop in the bucket for a multinational organization.

When determining the volume of big data insurers can handle, they need to look at both their internal systems and the types of data they’re working with. Cloud vs. on-premises data storage systems will impact a carriers’ processing power, security, and analytics capabilities for big data. Siloed systems are an obstacle that diminishes the volume of big data they can handle.

2) Velocity

When insurers look at the amount of big data they have and are continuing to collect, they should next consider the velocity at which it can be used. The speed of big data generation, collection, and refresh are the primary issues insurers will encounter with big data.

Big data today is generated at an almost incomprehensible pace. According to Forbes, 90 percent of all the data in the world was created in just the last two years, and we currently create approximately 2.5 quintillion bytes of data a day. Insurers need systems that can quickly aggregate this volume of data and continue to update caches so data analytics can perform at its optimal level.

3) Variety

The variety of information available to insurers is what spurred the growth of big data. Big data in insurance refers to two types of data – structured and unstructured. Structured data is easy to understand (think numbers, text, etc.), commonly used data collected from customers, including:

- Name

- Address

- Car make & model

- Dates

- Claims history

- Etc.

This type of data and personal information is easily categorized and organized in tables and charts for instant consumption and analysis. But the larger benefit of big data is the unstructured content now available.

Unstructured data is the unconventional information insurers can collect and use to impact all areas of business, such as policies, fraud detection, and customer experience. This information comes from data analytics and machine learning focused on content from social media, multimedia, written reports, and even wearable technologies.

The so-called Internet of Things (IoT) now empowers insurers to use unstructured data to paint an ever more complete picture of each customer, and to gain deeper insights into overall operations. And because it is generated from devices and platforms that never turn off, carriers have 24/7/365 access to a nonstop supply of unstructured data.

4) Veracity

Veracity is defined as conformity to facts, so in terms of big data, veracity refers to confidence in, and trustworthiness of, said data. When dealing with big data, this is somewhat of a double-edged sword – because there are such vast amounts of data generated from so many disparate sources, some big data is untrustworthy by default.

As volume, variety, velocity, and value all increase – as well as the other Vs of big data – veracity is more prone to decrease. There is always a slight margin of error with large data sets, but being able to work through them and still make the most accurate decisions possible is key to leveraging big data in insurance. As in any industry, insurers need to apply a built-in skepticism to verify their data and the insights it provides.

5) Validity

While the validity of data may sound similar to data veracity, in this instance we’re asking, “how well does this data relate to the questions and outcomes I am looking for?”

Undoubtedly, there are countless methods of creating and collecting data, all of which have their own benefits and limitations. When collecting big data for insurance, it is important to match the right data sets with the appropriate tasks. Furthermore, insurers should install consistent data practices – so every analysis and report get the same treatment, therefore improving accuracy.

6) Value

Of the 6 Vs of big data in insurance, value is arguably the most important. The value of big data has a two-part consideration – is the data being collected good or bad data, and are insurers making informed, business-driven decisions based on the valuable insights generated from all of this data?

Because big data is generated in massive amounts, the quality of the data can get watered down. Insurers should be focused on good data. But what makes good data? Good data is first and foremost accurate. It is also reliable and consistent, enabling insurers to continue to make data-driven decisions rather than one-off estimations.

The value of big data goes beyond just good and bad data; it’s also about what insurers do with it. Big data in insurance can be leveraged to make accurate decisions about insurance operations such as pricing and risk selection, but it can also influence marketing initiatives and identify emerging trends. Collecting big data is expensive – insurers need to make sure they’re getting their money’s worth.

How to Leverage Big Data in Insurance

The 6 Vs of big data in insurance are all about helping insurers maximize the data they’re collecting. To achieve that, P&C insurers are implementing predictive analytics systems to leverage their big data and glean actionable insights about their customers, operations, and business practices. Predictive analytics can help insurers make data-driven decisions regarding:

- Pricing

- Risk selection

- Potential fraud

- Claims

- New trends

There are many different programs and platforms that help insurers effectively leverage their data. Regardless of the programs they use, insurers must have the proper framework – the 6 Vs – around data collection, management, organization, and processing to ensure the highest quality data gets used in the fastest way possible.